Why Use AI Infrastructure as a Service?


As businesses race to integrate artificial intelligence (AI) solutions into their operations, many face a common challenge: building and maintaining the complex infrastructure needed to support scalable AI workloads.

This is where AI infrastructure as a service (IaaS) enters the picture, offering organizations a powerful way to access high-performance computing, storage, and networking resources without the burden of managing physical hardware. It enables enterprises of all sizes to focus on building and deploying models and applications instead of managing the underlying infrastructure.

However, delivering AI effectively for business outcomes is not simple. Renting time on frontier models is costly, can expose sensitive data, and may even put your business model at risk of competition from those same model providers. Meanwhile, the fast-growing ecosystem of open source and specialized models offers powerful alternatives, but using them requires the ability to host and manage your own AI infrastructure.

As organizations explore the full potential of AI, understanding how to effectively leverage infrastructure as a service becomes more essential than ever. This guide explores what AI IaaS is and provides an implementation roadmap to help enterprises get started.

Key highlights:

AI infrastructure as a service (IaaS) removes the burden of owning and operating hardware by providing scalable, high-performance environments for model training, inference, and deployment.

Purpose-built AI infrastructure (Neoclouds) delivers more predictable latency, better GPU utilization, and stronger control over data location compared to general-purpose cloud platforms.

Building custom infrastructure offers greater flexibility and compliance control, but it comes with higher costs and complexity, making it suitable only for certain enterprise use cases.

Mirantis k0rdent AI offers a powerful middle ground, combining the scalability of AI IaaS with the freedom and configurability of open-source infrastructure, optimized for secure, multi-cloud AI workloads.

What is AI Infrastructure as a Service?

AI infrastructure as a service is a cloud-based approach (private or public clouds) that provides on-demand access to the GPUs, compute, storage, and specialized tools needed to run AI workloads. It’s important to understand, however, that while hyperscale public cloud providers all make GPU nodes available today, true AI IaaS clouds (also called ‘Neoclouds’) are architecturally quite different.

True AI IaaS clouds are purpose-built for hosting AI workloads at scale, providing the latest GPUs and other specialized compute from key vendors such as NVIDIA, typically in dense arrangements with big power requirements.

AI applications may also need to:

Ingest volumes of data too large to move over the internet, or even over dedicated long-haul fiber links, in a reasonable time

Communicate and function with predictable latency

Provide strict security and sovereign control over data and model location

All these factors place sharp constraints on where Neoclouds can be built: close to affordable power (or equipped with power-generation and distribution facilities of their own), close to (or co-located with) data, and close enough to customers for predictable performance.
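
To see why data location matters, the arithmetic below estimates how long it takes to move a large dataset over a network link. It is a minimal sketch: the 2 PB dataset size, the link speeds, and the 80% efficiency factor are hypothetical assumptions, and the function name `transfer_days` is illustrative.

```python
# Estimate how long it takes to move a dataset over a network link.
# Decimal units: 1 TB = 10**12 bytes, 1 Gbps = 10**9 bits per second.

SECONDS_PER_DAY = 86_400


def transfer_days(dataset_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Days needed to move dataset_tb terabytes over a link_gbps link.

    efficiency (assumed 0.8 by default) models protocol overhead and
    contention; 1.0 means the link runs at full line rate the whole time.
    """
    bits = dataset_tb * 10**12 * 8
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical 2 PB (2,000 TB) training corpus over three link speeds.
    for gbps in (1, 10, 100):
        print(f"{gbps:>4} Gbps: {transfer_days(2_000, gbps):8.1f} days")
```

Under these assumptions, the 2 PB corpus needs roughly 23 days at 10 Gbps and more than 230 days at 1 Gbps, which is why Neoclouds are often placed near, or co-located with, the data they process.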

AI IaaS clouds also have internal architectures tailored to the requirements of AI training and inference workloads, including dedicated ultra-high-speed fiber-optic network backplanes that enable Remote Direct Memory Access (RDMA) among GPUs (in effect, distributed shared memory).

On top of this hardware sit purpose-built middleware stacks that let applications running in virtual machines or containers share GPUs. Sharing is critical for good utilization and cost management, because GPUs are a hugely expensive resource.
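
A quick calculation shows why GPU sharing matters for cost: idle hours are still billed, so low utilization inflates the effective price of each GPU-hour that does useful work. The $3.00 hourly price and the utilization levels below are hypothetical figures chosen for illustration.

```python
# Effective price of each GPU-hour that does useful work, given average utilization.


def cost_per_useful_gpu_hour(hourly_price: float, utilization: float) -> float:
    """hourly_price is what you pay per GPU-hour; utilization is the busy fraction in (0, 1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Idle hours are still billed, so their cost is spread over the busy hours.
    return hourly_price / utilization


if __name__ == "__main__":
    price = 3.00  # hypothetical on-demand price per GPU-hour
    for util in (0.25, 0.50, 0.90):
        print(f"{util:.0%} busy: ${cost_per_useful_gpu_hour(price, util):.2f} per useful GPU-hour")
```

At 25% utilization, the same GPU effectively costs $12.00 per useful hour, four times its list price, which is the gap that sharing across workloads aims to close.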

A sophisticated orchestration layer abstracts and manages this complex stack of hardware and software, letting platform engineers configure, deploy, and manage the lifecycle of the AI model and application hosting environments (typically based on Kubernetes) that customers see.

The customer-facing environments host:

Data-science, ML, and inference-oriented platform-as-a-service solutions

AI frameworks like TensorFlow and PyTorch

Pipelines for model building and model version control

Other application components and services

Benefits of Using AI Infrastructure as a Service

Leveraging true AI infrastructure as a service can offer several strategic advantages. Smaller companies and startups may have to use AI IaaS/Neoclouds to get in the game at all, since extreme demand makes latest-generation GPU hardware prohibitively expensive (or simply unavailable) in small quantities. Hardware, particularly GPUs, is also expensive and prone to rapid obsolescence.

AI IaaS offers a range of benefits, including reduced hardware costs and faster model deployment. Here’s how it helps teams move faster and scale smarter:

| Benefit | How AI IaaS Delivers |
| --- | --- |
| Cost Efficiency | Pay-as-you-go pricing reduces capital expenses for hardware and infrastructure |
| Scalability and Flexibility | Dynamic resource allocation to match changing workload demands |
| Faster Time to Market | Pre-configured environments and high-performance compute shorten development cycles |
| Access to the Latest Technology | Continuous hardware and software updates by the provider keep the infrastructure cutting-edge |
| Focus on Innovation | Removes the burden of infrastructure management, allowing teams to concentrate on AI R&D |
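
The cost-efficiency row can be made concrete with a rent-versus-buy comparison. The sketch below computes the fraction of a GPU's useful life it must be busy before buying becomes cheaper than renting. It assumes a flat annual operating cost and ignores financing and resale value; all prices and the three-year lifetime are hypothetical placeholders.

```python
# Rent-versus-buy break-even for a single GPU, ignoring financing and resale value.

HOURS_PER_YEAR = 365 * 24


def breakeven_utilization(purchase_price: float, lifetime_years: float,
                          annual_opex: float, rental_per_hour: float) -> float:
    """Fraction of the hardware's life it must be busy before owning beats renting.

    Owning costs the purchase price plus operating costs (power, cooling,
    staff) for the whole lifetime, whether or not the GPU is busy. Renting
    costs rental_per_hour only for the hours actually used.
    """
    total_cost_of_ownership = purchase_price + annual_opex * lifetime_years
    breakeven_hours = total_cost_of_ownership / rental_per_hour
    return breakeven_hours / (lifetime_years * HOURS_PER_YEAR)


if __name__ == "__main__":
    # Hypothetical: $30,000 GPU, 3-year life, $5,000/year to operate, $2.50/hour to rent.
    share = breakeven_utilization(30_000, 3, 5_000, 2.50)
    print(f"Owning wins only above {share:.0%} utilization")
```

With these placeholder numbers, owning only pays off if the GPU stays busy about 68% of the time for three years, and rapid obsolescence can shorten the realistic lifetime further.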

While there are significant benefits, AI IaaS cannot single-handedly address the complex requirements of large-scale, business-critical deployments. As the infrastructure as a service landscape matures, new solutions are emerging that further empower organizations.

These new solutions enable enterprises to place AI/ML infrastructure wherever it best serves their needs, whether:

On-premises for data sovereignty

At the edge for performance and to serve edge-specific use cases

Across multiple clouds for access to specialized hardware or cost optimization

Open, flexible approaches to AI also help organizations align with the fast-moving open source AI ecosystem, instead of being locked into a single vendor’s architecture or pricing.

How Does AI Infrastructure as a Service Work?

At its core, AI cloud infrastructure functions by abstracting the physical components of AI processing and delivering them through the cloud. The compute layer consists of virtualized or bare-metal instances powered by GPUs or TPUs optimized for AI workloads.

These instances are configured to handle a wide range of model complexities, from small-scale experiments to massive deep learning jobs. Data is managed via high-throughput storage systems that ensure fast access and retrieval, accommodating large-scale datasets essential to AI projects.

On top of that, the software stack includes containerized environments preloaded with:

Common AI/ML libraries

Frameworks

Orchestration tools

This makes it easier for teams to deploy and scale applications without spending time on configuration. Developers interact with these services through APIs and SDKs, often integrated with DevOps pipelines to streamline the transition from prototype to production. This is particularly important when running models via Inference as a Service, where performance, latency, and scalability are critical.

Enterprise-grade security features, including encryption, access controls, and compliance support, help keep the system secure. Together, these layers form a foundation that can support even demanding generative AI workloads.

AI Infrastructure as a Service Use Cases

The flexibility of AI infrastructure as a service supports a diverse set of use cases, making it a valuable resource across industries and application types.

Natural Language Processing (NLP): Businesses use AI IaaS to train and fine-tune models for chatbots, sentiment analysis, and document summarization.

Computer Vision: Retailers and manufacturers leverage AI IaaS for defect detection, object tracking, and visual search applications.

Predictive Analytics: From finance to healthcare, predictive modeling with AI inference is used for forecasting trends, risk assessment, and personalized recommendations.

Generative AI: With models such as GPT and Claude, AI IaaS powers the creation of synthetic text, images, and code. Startups and enterprises use generative AI infrastructure as a service to reduce compute costs while accelerating creativity.

Autonomous Systems: Industries like logistics and automotive use AI IaaS to simulate and test autonomous navigation systems without needing extensive on-premises labs.

How to Implement AI Infrastructure as a Service

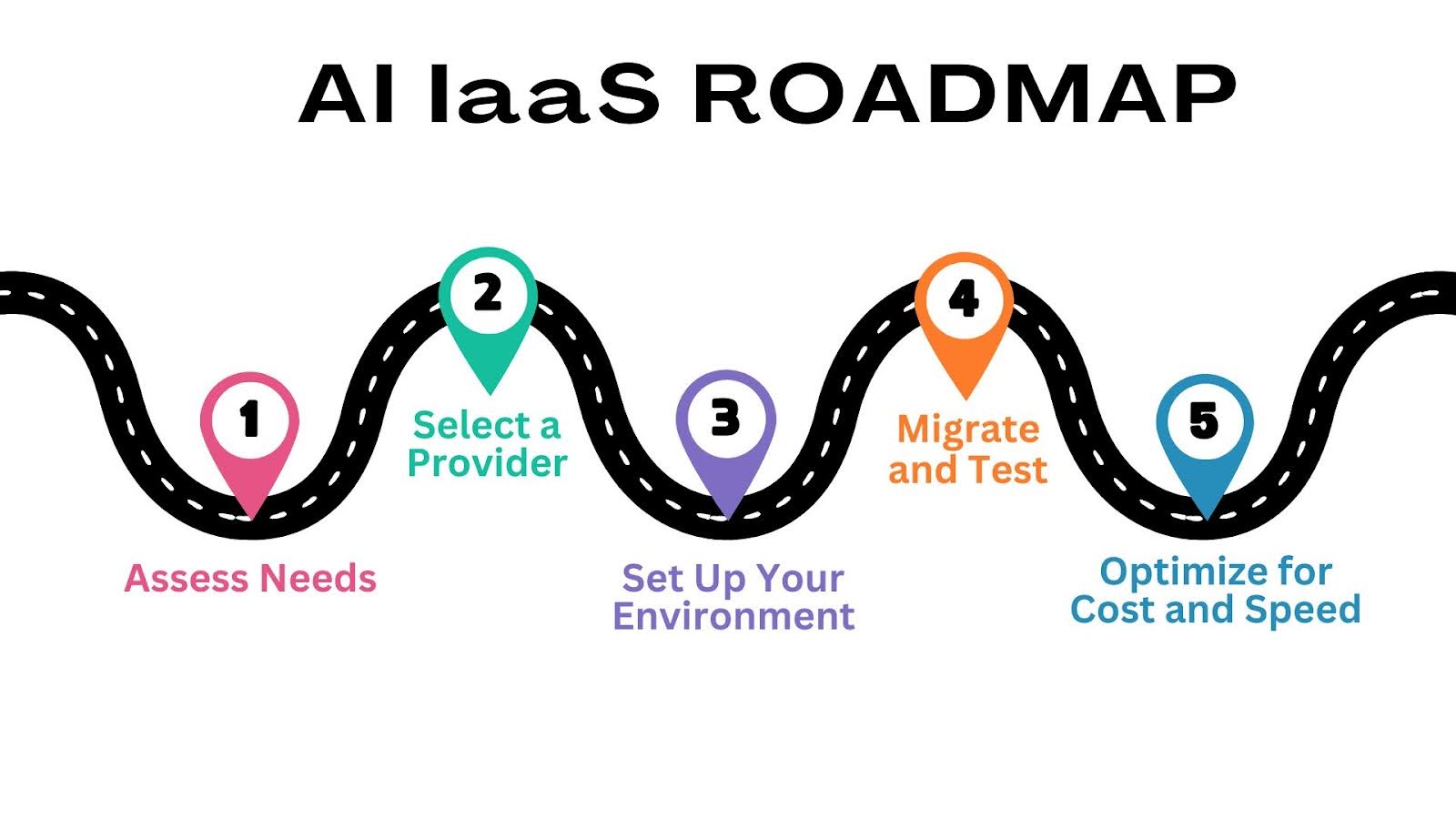

Adopting AI IaaS requires thoughtful planning and alignment with your organization’s goals. Here’s a simplified five-step roadmap to help get you started:

1. Assess Needs

Start by clearly defining what your AI workloads require. This foundational step determines the scale, performance, compliance, and architecture constraints you’ll need your AI infrastructure to support.

To get started, your enterprise should:

Understand your data volume and where it originates (e.g., cloud, on-prem, edge)

Assess compute intensity: training vs. inference, batch vs. real-time, GPU needs

Identify model complexity and how frequently you expect to retrain or redeploy

Clarify compliance requirements and data residency needs

Map out business goals and integration points with other systems or teams
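
For the compute-intensity assessment, a common first pass is to estimate the GPU memory needed just to hold a model's weights. The sketch below assumes that weights dominate memory and adds a flat 20% overhead as a stand-in for activations, KV cache, and runtime buffers. Real overhead varies with batch size and context length, so treat the result as a rough starting point, not a sizing guarantee.

```python
import math

# Rough GPU sizing for serving a model. Assumption: weights dominate memory, and a
# flat overhead fraction stands in for activations, KV cache, and runtime buffers.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def serving_memory_gb(params_billion: float, dtype: str = "fp16", overhead: float = 0.2) -> float:
    """Approximate memory in GB (10**9 bytes) to hold the model plus overhead."""
    weight_bytes = params_billion * 1e9 * BYTES_PER_PARAM[dtype]
    return weight_bytes * (1 + overhead) / 1e9


def gpus_needed(params_billion: float, gpu_memory_gb: float, dtype: str = "fp16") -> int:
    """Minimum GPUs whose combined memory covers the estimate.

    Assumes the model can be sharded across GPUs (tensor or pipeline parallelism).
    """
    return math.ceil(serving_memory_gb(params_billion, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    for params, gpu_gb in ((7, 24), (70, 80)):
        need = serving_memory_gb(params)
        print(f"{params}B params (fp16): ~{need:.0f} GB -> {gpus_needed(params, gpu_gb)} x {gpu_gb} GB GPU(s)")
```

Under these assumptions, a 70-billion-parameter model in FP16 needs about 168 GB, or three 80 GB GPUs, while a 7-billion-parameter model fits on a single 24 GB GPU.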

2. Select a Provider

Instead of settling for general-purpose cloud platforms with GPU instances, it may be better to seek out vendors with purpose-built AI infrastructure solutions. The goal is to select a provider that fits your performance, scalability, and governance needs.

When selecting the best provider to fit your organizational needs, ensure you:

Evaluate whether vendors offer AI-optimized model deployment pipelines

Compare latency performance, especially for real-time applications

Look for built-in observability and performance monitoring

Consider how easily you can scale across regions or hybrid environments

Prioritize vendors that support Kubernetes-native deployments and open standards to avoid lock-in
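
One way to apply this checklist consistently is a weighted decision matrix. In the sketch below, the criteria mirror the bullets above, but the weights, the 1 to 5 scores, and the provider names are hypothetical; adjust them to your own priorities.

```python
# Weighted decision matrix for comparing AI infrastructure providers.
# Weights sum to 1.0; scores use a 1 (poor) to 5 (excellent) scale.

WEIGHTS = {
    "deployment_pipelines": 0.20,
    "latency": 0.25,
    "observability": 0.15,
    "multi_region_hybrid": 0.20,
    "open_standards": 0.20,
}

# Hypothetical scores for two unnamed providers.
PROVIDERS = {
    "provider_a": {"deployment_pipelines": 4, "latency": 3, "observability": 5,
                   "multi_region_hybrid": 3, "open_standards": 2},
    "provider_b": {"deployment_pipelines": 3, "latency": 5, "observability": 3,
                   "multi_region_hybrid": 4, "open_standards": 5},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Sum of weight * score across all criteria; every criterion must be scored."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weight * scores[criterion] for criterion, weight in weights.items())


def rank(providers: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Providers sorted from highest to lowest weighted score."""
    scored = [(name, weighted_score(s)) for name, s in providers.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(PROVIDERS):
        print(f"{name}: {score:.2f}")
```

Here `provider_b` ranks first (4.10 versus 3.30) mainly because latency and open standards carry heavy weights; shifting the weights is how the matrix encodes your organization's priorities.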

3. Set Up Your Environment

This step involves configuring your AI stack and establishing the technical foundation for building, training, and deploying models. You want a repeatable environment that scales as needed and is easy to maintain.

Here is what the setup process entails:

Deploying virtual machines or containers based on your use case

Configuring the necessary AI/ML frameworks

Establishing persistent and high-speed storage connections for model and dataset access

Using infrastructure templates or AI-ready images, where possible, to save time

Integrating DevOps and MLOps tooling early to support automation and reproducibility
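
When the environment is Kubernetes-based, GPUs are typically requested as the extended resource `nvidia.com/gpu`, which the NVIDIA device plugin exposes on GPU nodes. The sketch below generates such a Pod manifest as JSON, with a persistent volume for models and datasets. The pod name, image, mount path, and PersistentVolumeClaim name are hypothetical, and the claim is assumed to exist already.

```python
import json

# Build a Kubernetes Pod manifest that requests GPUs and mounts persistent storage.
# Names, image, and paths are hypothetical placeholders.


def gpu_pod_manifest(name: str, image: str, gpus: int = 1, pvc_name: str = "models") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are extended resources requested via limits; the scheduler
                # places the pod only on a node with enough free GPUs.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
                "volumeMounts": [{"name": "model-store", "mountPath": "/models"}],
            }],
            # Persistent storage for model weights and datasets (assumes the PVC exists).
            "volumes": [{"name": "model-store",
                         "persistentVolumeClaim": {"claimName": pvc_name}}],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("trainer", "registry.example.com/ml/trainer:0.1", gpus=2)
    print(json.dumps(manifest, indent=2))  # e.g. pipe into `kubectl apply -f -`
```

Generating manifests from code or templates keeps environments repeatable, which is the point of the setup step; the same function can stamp out identical pods across clusters.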

4. Migrate and Test

Once your environment is ready, move your existing models and datasets over. This phase ensures your pipelines work as expected and helps identify gaps in performance or compatibility.

A typical migration and testing checklist looks like this:

Transfer models, training datasets, and preprocessing pipelines to the new environment

Run tests for both batch and real-time inference to measure latency and throughput

Validate integrations with APIs, databases, or frontend apps

Monitor memory, GPU usage, and model performance during inference

Iterate on deployment scripts or CI/CD flows to catch issues early
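
For the latency and throughput tests, percentiles are more informative than averages because tail latency is what users notice. The sketch below times an inference callable and reports nearest-rank percentiles. `fake_model` is a hypothetical stand-in that you would replace with a call to your real model or endpoint.

```python
import math
import statistics
import time
from typing import Callable


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def benchmark(infer: Callable[[str], str], payloads: list[str]) -> dict[str, float]:
    """Time each call sequentially: this measures single-client latency, not peak concurrency."""
    latencies_ms = []
    start = time.perf_counter()
    for payload in payloads:
        t0 = time.perf_counter()
        infer(payload)
        latencies_ms.append((time.perf_counter() - t0) * 1000)
    elapsed_s = time.perf_counter() - start
    latencies_ms.sort()
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "mean_ms": statistics.fmean(latencies_ms),
        "throughput_rps": len(payloads) / elapsed_s,
    }


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real inference call."""
    time.sleep(0.002)  # simulate about 2 ms of work
    return prompt.upper()


if __name__ == "__main__":
    results = benchmark(fake_model, ["hello"] * 200)
    for key, value in results.items():
        print(f"{key}: {value:.2f}")
```

Running the same harness before and after migration gives a like-for-like comparison; for concurrent load, a dedicated load-testing tool is a better fit than this sequential loop.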

5. Optimize for Cost and Speed

Once deployed, your AI IaaS setup should be fine-tuned for cost efficiency and operational performance. This ongoing process ensures that your infrastructure scales with your business without runaway costs.

Proper optimization requires your team to:

Use autoscaling to avoid overpaying for unused compute or underpowering your AI workloads

Explore instance reservation or spot markets to lower baseline infrastructure costs

Offload less time-sensitive jobs to lower-cost compute tiers or batch queues

Evaluate hybrid or multi-cloud strategies to shift workloads closer to data or users

Continuously track and adjust based on performance KPIs and GPU utilization
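
Autoscaling on Kubernetes is commonly handled by the Horizontal Pod Autoscaler, whose documented rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that rule with min/max clamping. Using average GPU utilization as the metric is an assumption that requires a custom or external metrics pipeline, and the real controller applies further behaviors (such as stabilization windows) not modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica count for a single metric (e.g. average GPU utilization %)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: avoid flapping by keeping the current size.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (the bounds here are hypothetical defaults).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

For example, four replicas running at 90% GPU utilization against a 60% target scale to six, while a reading of 63% stays within tolerance and leaves the deployment at four.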

Challenges of AI SaaS for Enterprise AI

Given the complexity of running AI at scale, many organizations are tempted to use AI SaaS platforms that offer convenience and fast time to productivity. These platforms often provide resources for model hosting, inference, and pipelines. However, the AI SaaS approach comes with notable limitations.

Lock-In: Many AI SaaS platforms implement proprietary tooling, workflows, and abstractions that are incompatible with broader industry standards. The deeper an organization builds on a given AI SaaS, the harder it becomes to switch providers. This results in a long-term dependence on a single vendor's roadmap and pricing.

Limitations on Security and Control: For organizations in regulated industries or those with strict data governance needs, AI SaaS can present risks. Many platforms limit customers' ability to control where models and data are located, complicating compliance with EU and other data sovereignty regulations.

Performance Limitations: Certain AI applications (such as low-latency interactive services) require models to run physically close to end users: on-premises, at the edge, or in specific cloud regions. AI SaaS platforms are often not optimized for these deployment patterns.
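
Physics sets a floor on how far a latency-sensitive model can sit from its users. Light in optical fiber travels at roughly 200,000 km/s, so each 100 km of distance adds about 1 ms of round-trip time before any routing, queuing, or inference delay. The sketch below computes that lower bound; the distances and the `route_factor` parameter for indirect cable paths are illustrative assumptions.

```python
# Lower bound on network round-trip time imposed by distance alone.

FIBER_KM_PER_MS = 200.0  # light in fiber covers roughly 200,000 km/s, i.e. ~200 km per ms


def min_rtt_ms(distance_km: float, route_factor: float = 1.0) -> float:
    """Round-trip propagation delay in milliseconds.

    route_factor > 1.0 models cable paths longer than the straight-line distance
    (an assumption; real routes vary). Routing, queuing, and inference time come
    on top of this floor.
    """
    return 2 * distance_km * route_factor / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Illustrative distances: metro edge site, national region, another continent.
    for label, km in (("edge site", 50), ("regional cloud", 1_000), ("overseas region", 6_000)):
        print(f"{label:>15}: >= {min_rtt_ms(km):.1f} ms round trip")
```

A user 1,000 km away faces at least 10 ms of round-trip delay even on a perfectly straight fiber path, whereas an edge site 50 km away costs about 0.5 ms.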

Building Your Own AI Infrastructure

For organizations with unique data privacy requirements or highly customized AI workloads, building a custom generative AI infrastructure may be more appropriate than relying solely on a service provider. In practice, dynamically scaling cloud resource usage up and down with application traffic to cut costs is often an uphill battle, which can make a custom build the better option.

This approach begins with deploying custom hardware, which is specifically tuned to support heavy AI training and inference jobs. Next comes the optimization of the software stack, including AI frameworks that are tailored for performance.

Model lifecycle management is another essential layer, providing:

Tools for version control

Experimentation tracking

Performance monitoring
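
The lifecycle layer can be illustrated with a deliberately tiny, in-memory model registry that versions artifacts and records the parameters and metrics of each experiment. Class names, fields, and the artifact URIs are hypothetical; production setups typically rely on a dedicated tracking server with durable storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str           # where the trained weights live
    params: dict[str, float]    # hyperparameters used for this experiment
    metrics: dict[str, float]   # evaluation results for this experiment
    created_at: datetime


@dataclass
class ModelRegistry:
    _versions: dict[str, list[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str,
                 params: dict[str, float], metrics: dict[str, float]) -> ModelVersion:
        """Record a new version; version numbers increase monotonically per model name."""
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, artifact_uri, dict(params), dict(metrics),
                          datetime.now(timezone.utc))
        versions.append(mv)
        return mv

    def history(self, name: str) -> list[ModelVersion]:
        """All recorded versions of a model, oldest first."""
        return list(self._versions.get(name, []))

    def best(self, name: str, metric: str, higher_is_better: bool = True) -> ModelVersion:
        """The version with the best value of the given metric."""
        pick = max if higher_is_better else min
        return pick(self._versions[name], key=lambda mv: mv.metrics[metric])


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("sentiment", "s3://example-bucket/sentiment/v1", {"lr": 1e-3}, {"f1": 0.81})
    registry.register("sentiment", "s3://example-bucket/sentiment/v2", {"lr": 5e-4}, {"f1": 0.84})
    best = registry.best("sentiment", "f1")
    print(best.version, best.artifact_uri)
```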

Additionally, automated data pipelines are necessary for models to continuously receive and process input data, and orchestration platforms help automate the ingestion, preprocessing, and labeling of massive datasets. Security and governance must be integrated at every level, from identity management to access controls and logging.

Although a custom approach demands higher investment and expertise, it grants full control over infrastructure. Tailor-made AI infrastructure can also yield competitive advantages, especially for companies creating proprietary generative models.

Overcoming Limitations with Open, Flexible AI Infrastructure Solutions from Mirantis

While AI SaaS platforms offer convenience, they often come with trade-offs such as vendor lock-in, limited control over data and models, and challenges in meeting compliance requirements. Of course, building your own AI infrastructure from scratch can address many of these limitations, but doing so requires ongoing investments of significant time and expertise.

To address these issues, organizations often turn to solutions that provide the flexibility and control of open-source infrastructure without sacrificing ease of use. Mirantis k0rdent AI emerges as a compelling alternative, offering a Kubernetes-native, open-source platform designed for deploying and managing AI inference workloads at scale.

Key advantages include:

Deployment Flexibility: Run AI inference applications across public clouds, private data centers, and edge environments, ensuring optimal performance and compliance with sovereign data requirements.

Avoidance of Vendor Lock-In: Leverage CNCF-standard Kubernetes and an open AI stack to maintain complete control over your infrastructure and avoid dependency on proprietary cloud services.

Optimized Resource Utilization: Maximize GPU utilization and dynamically scale AI workloads with built-in monitoring and traffic routing capabilities.

Enhanced Security and Compliance: Protect sensitive data, automatically enforce policies, and maintain data sovereignty with secure, open-source solutions.
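
Policy-driven placement, the idea behind enforcing data sovereignty automatically, can be sketched as a filter that admits only clusters in allowed regions with enough free GPUs. This is a generic illustration, not a k0rdent AI interface: the cluster names, regions, and policy shape are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    region: str
    gpus_free: int


def eligible_clusters(clusters: list[Cluster], allowed_regions: set[str],
                      gpus_needed: int) -> list[str]:
    """Names of clusters that satisfy both the residency policy and the GPU request."""
    return [
        c.name for c in clusters
        if c.region in allowed_regions      # data-sovereignty rule
        and c.gpus_free >= gpus_needed      # capacity rule
    ]


if __name__ == "__main__":
    fleet = [
        Cluster("fra-dc1", "eu-central", 8),
        Cluster("par-edge", "eu-west", 2),
        Cluster("use1-cloud", "us-east", 64),
    ]
    # Hypothetical policy: EU-only placement for a workload needing 4 GPUs.
    print(eligible_clusters(fleet, {"eu-central", "eu-west"}, gpus_needed=4))
```

Although `use1-cloud` has the most free GPUs, the residency rule excludes it, and `par-edge` lacks capacity, so only `fra-dc1` qualifies.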

By adopting solutions like Mirantis k0rdent AI, organizations can achieve the same scalability and time to market as AI IaaS platforms while gaining the flexible configurability and cost-effectiveness of open-source infrastructure.

Book a demo today and see how Mirantis helps you deploy scalable, open, and secure AI infrastructure as a service, without vendor lock-in.
