The Complete Guide to Edge Computing Architecture


Most enterprises rely on traditional cloud-based infrastructure for its agility, scalability, and other benefits, but it also has key limitations that make it difficult to support many modern workloads and emerging use cases. The main disadvantages of traditional cloud-based infrastructure are its high latency, bandwidth constraints, dependency on central data centers, and rising costs.

Edge computing is a compelling alternative that addresses these issues. It processes data on or near the devices that generate and store it, rather than in distant central data centers.

This guide explores edge computing architecture, from benefits to best practices. It also covers how platforms like the open source k0rdent can help enterprises unlock its full potential.

Key highlights:

Edge computing architecture brings computation closer to the source, reducing latency and cutting bandwidth costs.

Core components include edge devices, edge gateways, edge servers, network layers, and cloud integrations.

Enterprises benefit from edge computing due to faster response times, improved security, real-time application support, and scalability.

What Is Edge Computing Architecture?

Edge computing architecture is the framework of devices, gateways, servers, and networks that carries out edge computing. Cloud computing processes data remotely in centralized data centers. Edge computing instead processes data locally, on or near the device that gathers it.

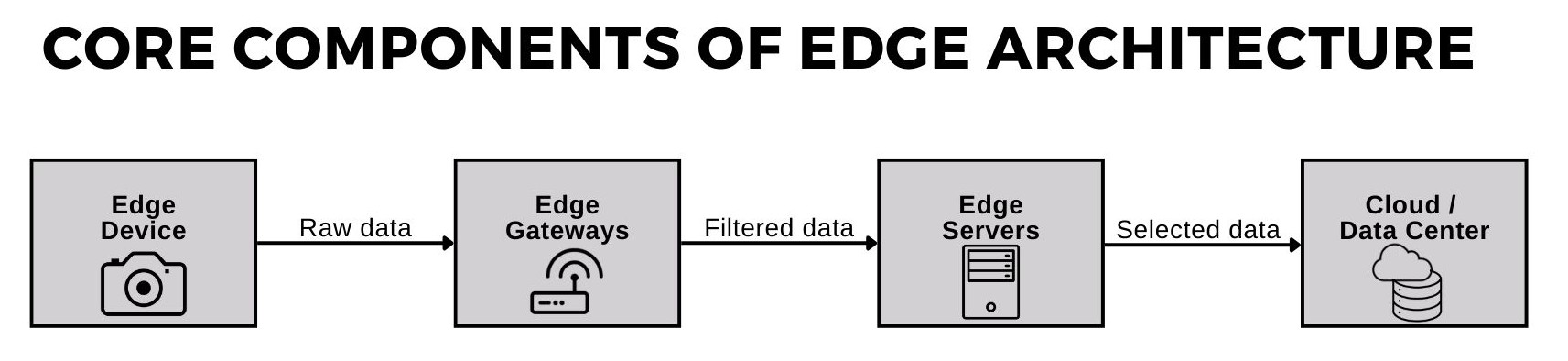

Core Components of Edge Architecture

There are several components that must come together to build a scalable edge architecture. Let’s take a closer look:

Edge Device: The first piece is the edge device itself, such as an IoT device, camera, or sensor. It generates the raw data and is the first point of contact in the system. Only minimal processing, like data filtering, is performed here.

Edge Gateways: These are where data from multiple devices is aggregated. Basic analytics and preprocessing, such as aggregation and format conversion, also take place here.

Edge Servers: These handle local processing for real-time applications and run containerized workloads or AI inference models. They also temporarily hold critical data before it is synced to the cloud.

Network Layer: This layer connects edge components to each other and to the cloud over LAN, 5G, Wi-Fi, or satellite links.

Cloud or Data Center: Provides long-term storage, in-depth analytics, and model training, along with centralized management and orchestration.
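The following minimal Python sketch makes the division of labor concrete. It walks through the device, gateway, and server tiers described above. Everything in it is illustrative and not part of any particular edge platform:

- the `Reading` type
- the plausible-range limits of -40 to 125
- the `alert_threshold` of 80
- the in-memory `outbox`

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    sensor_id: str
    value: float  # illustrative unit, e.g. temperature in degrees C


def device_filter(readings, low=-40.0, high=125.0):
    """Edge device: drop readings outside a (hypothetical) plausible sensor range."""
    return [r for r in readings if low <= r.value <= high]


def gateway_aggregate(readings):
    """Edge gateway: group readings per sensor and convert them to summary dicts."""
    by_sensor = {}
    for r in readings:
        by_sensor.setdefault(r.sensor_id, []).append(r.value)
    return [
        {"sensor": sid, "count": len(vals), "mean": mean(vals), "max": max(vals)}
        for sid, vals in sorted(by_sensor.items())
    ]


class EdgeServer:
    """Edge server: act on summaries locally and hold them for later cloud sync."""

    def __init__(self, alert_threshold=80.0):
        self.alert_threshold = alert_threshold  # hypothetical local alert rule
        self.outbox = []  # summaries waiting to be synced to the cloud

    def process(self, summaries):
        alerts = [s["sensor"] for s in summaries if s["max"] > self.alert_threshold]
        self.outbox.extend(summaries)
        return alerts


raw = [Reading("s1", 21.5), Reading("s1", 22.5), Reading("s2", 90.0), Reading("s2", 999.0)]
server = EdgeServer()
alerts = server.process(gateway_aggregate(device_filter(raw)))
print(alerts)  # ['s2']  (the 999.0 reading was filtered out at the device)
```

Each tier shrinks the data before passing it on. The cloud receives per-sensor summaries from `outbox` rather than every raw reading.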

Benefits of Edge Computing Architecture

Edge computing offers distinct benefits when compared to traditional cloud-based computing. The reduced latency, lower costs, improved reliability, heightened security, and increased flexibility all make edge computing a powerful option for many modern workloads and emerging use cases.

Reduced Latency

One of the main benefits of edge computing is the reduction in latency due to local processing. Since data does not have to be sent to the cloud for processing, response times are significantly faster. These near-instant results are crucial for time-sensitive applications like autonomous vehicles or medical monitors, where delayed responses can put human lives at risk.
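Part of the latency advantage comes from physics. Assume light in optical fiber travels at about 200,000 km/s, roughly two-thirds of its speed in a vacuum. Propagation delay alone then sets a floor on round-trip time. The distances below are hypothetical, and real networks add routing, queuing, and processing delays on top of this floor.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber (~2/3 of c)


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone, in milliseconds."""
    return 2 * distance_km * 1000 / FIBER_KM_PER_S


for label, km in [("on-site edge server", 0.5), ("regional cloud", 500), ("distant cloud region", 2_000)]:
    print(f"{label:>22}: >= {min_round_trip_ms(km):.3f} ms")
```

A vehicle moving at 100 km/h travels roughly 0.56 m during a 20 ms round trip, before any processing time is counted.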

Lower Bandwidth Cost

Edge computing also reduces network congestion and operating costs, because devices send only filtered or summarized data to the cloud rather than raw streams. This improves performance in environments where bandwidth is limited and reduces the cost of constant high-speed connections.
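The savings are easy to estimate. The sketch below compares streaming raw readings with sending one summary per sensor per minute. The fleet size, sampling rate, and record sizes are hypothetical, and protocol overhead is ignored.

```python
SECONDS_PER_DAY = 86_400


def raw_bytes_per_day(sensors, hz, bytes_per_reading):
    """Bytes per day if every raw reading is streamed to the cloud."""
    return sensors * hz * bytes_per_reading * SECONDS_PER_DAY


def summary_bytes_per_day(sensors, summaries_per_day, bytes_per_summary):
    """Bytes per day if each sensor sends only periodic summaries."""
    return sensors * summaries_per_day * bytes_per_summary


raw = raw_bytes_per_day(sensors=200, hz=10, bytes_per_reading=16)
summarized = summary_bytes_per_day(sensors=200, summaries_per_day=1_440, bytes_per_summary=64)
print(f"raw: {raw / 1e9:.2f} GB/day, summarized: {summarized / 1e6:.1f} MB/day, "
      f"reduction: {raw // summarized}x")
```

With these assumptions, the output is `raw: 2.76 GB/day, summarized: 18.4 MB/day, reduction: 150x`.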

Improved Reliability

Because edge computing uses local processing, it depends far less on remote infrastructure. For example, it does not need a stable internet connection and can continue operating during outages. This significantly reduces downtime risk for mission-critical applications. Edge computing is also preferred in extreme environments where internet connectivity is unreliable, such as monitoring coral reef health in the middle of the ocean.
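A common way to keep working through outages is a store-and-forward buffer. Local processing continues, and results destined for the cloud queue up until the link returns. This is a minimal sketch under two assumptions:

- The `send` callable is a hypothetical stand-in for a real uplink client.
- When the bounded `deque` is full, it drops the oldest records first. This is one policy choice among several.

```python
from collections import deque


class StoreAndForward:
    """Buffer outbound records while the uplink is down; deliver them in order later."""

    def __init__(self, send, max_buffered=10_000):
        self.send = send  # hypothetical uplink: returns True if the record was delivered
        self.buffer = deque(maxlen=max_buffered)  # oldest records dropped when full

    def submit(self, record):
        self.buffer.append(record)
        self.flush()

    def flush(self):
        """Send buffered records oldest-first; stop at the first failure."""
        while self.buffer:
            if not self.send(self.buffer[0]):
                return False  # uplink still down: keep the record for the next attempt
            self.buffer.popleft()
        return True


# Usage: simulate an outage, then reconnect.
delivered = []
online = False


def send(record):
    if online:
        delivered.append(record)
    return online


sf = StoreAndForward(send, max_buffered=3)
for i in range(5):
    sf.submit({"seq": i})  # outage: nothing is delivered; only the newest 3 are kept
online = True
sf.flush()
print([r["seq"] for r in delivered])  # [2, 3, 4]
```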

Heightened Security

Edge computing keeps data on-site, which makes it easier to control. Because data is processed locally, less of it needs to be transmitted, which reduces possible exposure in transit. Edge computing also supports local encryption. Together, these measures help protect regulated or sensitive data and can make compliance with data regulations smoother.

Increased Flexibility

The relatively isolated processing of edge computing makes it more flexible than traditional solutions. Edge computing accommodates growth in connected devices without adding load to central servers. It’s also possible to scale in a hybrid fashion with cloud resources or to deploy new edge nodes as demand increases.

What Is an Edge Server?

An edge server is a server that carries out processing close to the data generation source. Because edge servers process and store data locally, they are especially valuable in remote or bandwidth-constrained environments, where they reduce the need to send data to the cloud.

For example, edge servers can be used on offshore oil rigs to check pressure levels, detect leaks, and monitor equipment performance. In remote environments like this, traditional cloud-based infrastructure does not provide the same level of reliability.

Edge vs. Cloud Computing: What’s the Difference?

Traditional cloud computing centralizes data processing in data centers, while edge computing keeps processing closer to the actual data source. Aside from this core difference, there are several other factors that differentiate edge computing and cloud computing:

| Feature | Edge Computing | Cloud Computing |
| --- | --- | --- |
| Processing | Local, near device | Centralized in a data center |
| Latency | Extremely low, near-instant | Higher, dependent on distance |
| Bandwidth | Reduced, selective data transfer | Heavy, continuous transfer |
| Reliability | Works offline or intermittently | Needs stable connectivity |
| Security | Local data storage improves control | More exposure due to transit |

Clearly, there are distinct differences between edge computing and cloud computing. However, it is not necessary to choose one or the other; hybrid models can give you the best of both worlds. A hybrid model, or the edge cloud, uses the local processing of edge computing while relying on the cloud for scalability and analytics. This creates a combination of real-time responsiveness and long-term intelligence, which helps optimize cost, performance, and reliability.

Top Enterprise Applications of Edge Computing

Enterprises apply edge computing in many ways; here are some of the top use cases:

Autonomous Vehicles: Low-latency edge computing is used for processing camera inputs. This is key for both safety and navigation processing.

Healthcare: Edge computing is used to analyze data from patient monitoring devices, where every second counts. Local processing can also track hospital equipment performance to help maintain standards.

Manufacturing: Manufacturing equipment logs can be processed locally in order to enhance maintenance routines. Real-time monitoring helps with production and can reduce errors.

Retail Analytics: Edge computing can provide real-time insights on consumer behavior in-store and streamline inventory management.

Smart Cities: Real-time traffic management, energy optimization, and public safety monitoring all rely on edge computing for quick and local processing.

How to Build an Edge Computing Infrastructure

Gaining the benefits of edge computing may not be as difficult as you think. Here’s an overview of the steps to build an edge computing infrastructure:

Define requirements: Begin by identifying target use cases so that latency, throughput, and uptime requirements can be defined. It’s also helpful to estimate the data volume that devices will generate and to determine real-time vs. batch processing needs. Finally, identify any regulatory or data residency requirements that apply.

Select edge locations: When choosing edge sites near data sources, the number of locations must be balanced against the cost and complexity. Environmental factors, such as temperature, power, and physical security, also come into play here.

Choose hardware: Picking suitable hardware is essential to success. Edge servers should have adequate CPU, memory, and storage for their workloads, and IoT sensors must support the protocols used by your edge gateways. Make sure that all hardware is durable, especially in harsh environments or remote locations.

Deploy management software: The next step is to install operating systems and deploy apps with orchestration tools. You should also centralize device registration and policy management and implement secure remote access.

Integrate with central systems: Set up data pipelines connecting each edge location to the cloud or data center, and sync only required or filtered data.

Test performance: Once real workloads are running, conduct thorough latency, accuracy, and security testing at each site. Hardware resilience can also be tested through simulated power loss or adverse weather.
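For the latency portion of the final step, a simple harness can time a workload repeatedly. It then compares a tail percentile against the budget defined in the first step. This sketch uses the nearest-rank percentile method. The workload and the 50 ms p95 budget are hypothetical placeholders.

```python
import math
import time


def percentile(samples, p):
    """Nearest-rank percentile, for p in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def measure_latency_ms(fn, runs=200):
    """Call fn() `runs` times and return each call's latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def meets_budget(samples, p95_budget_ms):
    """True if the 95th-percentile latency is within the budget."""
    return percentile(samples, 95) <= p95_budget_ms


# Hypothetical workload standing in for a real processing or inference call.
samples = measure_latency_ms(lambda: sum(range(10_000)))
print(f"p50={percentile(samples, 50):.3f} ms, p95={percentile(samples, 95):.3f} ms, "
      f"within budget: {meets_budget(samples, p95_budget_ms=50)}")
```

Checking a tail percentile such as p95, rather than the average, matters for time-sensitive workloads. A mean can hide occasional slow responses.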

Common Edge Computing Challenges Faced by Enterprises

There are clearly many edge computing benefits. However, the road to implementation is not always smooth, as there are various issues that can complicate edge computing. Here are some common edge computing challenges that enterprises may face:

Complex Device and Network Management: Distributed devices require coordinated upkeep and configuration. The variation in hardware types and firmware versions only adds to the complexity.

Security Risks due to Distributed Endpoints: It is more difficult to enforce security at remote sites, and each device is a potential attack surface. Inconsistent patching can leave systems exposed, and inter-device communication may be vulnerable to interception.

Limited Resources: It is not always possible to run resource-heavy tools locally, especially on smaller edge devices. Constrained CPU, memory, or storage may necessitate offloading tasks, which increases latency.

Complex Cloud Integration: Inconsistent network connections can disrupt data synchronization, while bandwidth costs increase for large data transfers. Compatible APIs are also needed between edge and cloud platforms.
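When connectivity is inconsistent, retrying synchronization immediately in a tight loop can make congestion worse. One widely used approach is exponential backoff with jitter, sketched below. The `upload` callable and the default delays are hypothetical. `sleep` and `rng` are injectable so the behavior can be tested without waiting.

```python
import random
import time


def sync_with_retry(upload, batch, max_attempts=5, base_s=1.0, cap_s=60.0,
                    sleep=time.sleep, rng=random.random):
    """Try to upload `batch`, waiting an exponentially growing, jittered delay between tries.

    `upload` is a hypothetical callable that raises ConnectionError on failure.
    Returns True if the batch was delivered, False if every attempt failed, in which
    case the caller should keep the batch buffered locally and try again later.
    """
    for attempt in range(max_attempts):
        try:
            upload(batch)
            return True
        except ConnectionError:
            if attempt == max_attempts - 1:
                break
            ceiling = min(cap_s, base_s * 2 ** attempt)
            sleep(rng() * ceiling)  # "full jitter": a random delay in [0, ceiling)
    return False


# Usage: an uplink that fails twice, then succeeds.
calls = []


def flaky_upload(batch):
    calls.append(batch)
    if len(calls) < 3:
        raise ConnectionError("uplink unavailable")


waits = []
ok = sync_with_retry(flaky_upload, ["reading-1"], sleep=waits.append,
                     rng=lambda: 1.0)  # deterministic stand-in for random.random
print(ok, len(calls), waits)  # True 3 [1.0, 2.0]
```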

Edge Computing Best Practices

Edge computing examples make it clear that it’s very powerful and can bring significant advantages to an enterprise if used correctly. Following best practices helps ensure that edge workloads, including AI workloads, run efficiently and deliver maximum performance. Here are some best practices to keep in mind:

Standardize Hardware and Protocols

When using edge computing, standardization will help streamline the process. The goal here is to simplify deployment, reduce maintenance complexity, and ensure consistent performance. To achieve this, make sure to:

Select a small set of approved hardware models for edge devices and servers

Create and maintain a hardware compatibility list for all deployments

Train IT staff, platform engineers, and DevOps engineers on standard configurations

Document all installation requirements for future consistency
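A hardware compatibility list is most useful when it is checked automatically. This sketch audits a device inventory against such a list. The model names, firmware versions, and `Device` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    model: str
    firmware: str


# Hypothetical hardware compatibility list: approved model -> approved firmware versions.
HCL = {
    "gateway-a1": {"2.3.1", "2.4.0"},
    "server-x200": {"1.9.7"},
}


def audit(fleet):
    """Return (device, reason) for every device that does not match the compatibility list."""
    problems = []
    for dev in fleet:
        approved = HCL.get(dev.model)
        if approved is None:
            problems.append((dev, "model not approved"))
        elif dev.firmware not in approved:
            problems.append((dev, f"firmware {dev.firmware} not approved"))
    return problems


fleet = [Device("gateway-a1", "2.4.0"), Device("gateway-a1", "2.2.0"), Device("cam-z9", "1.0")]
for dev, reason in audit(fleet):
    print(dev.model, "->", reason)
```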

Containerize Applications

Using containerized applications is a great way to make edge computing more efficient. Containerization improves portability, deployment speed, and ease of updates. When containerizing your applications:

Package applications in containers

Use lightweight orchestrators for small edge deployments

Maintain a central container registry with signed, verified images

Implement CI/CD pipelines for automatic testing and building

Regularly update base images for security patches and dependency updates

Prioritize Security

While creating an edge computing pipeline, security should be a top priority at every step. Protecting devices, data, and networks from threats is paramount. To implement robust security, you should:

Encrypt data in transit and at rest

Apply multi-factor authentication for device and system access

Set up least privilege access policies for users and applications

Scan regularly for vulnerabilities
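Least privilege is easiest to reason about as deny-by-default: a user or application can do only what it has been explicitly granted. The roles, actions, and resources below are hypothetical examples, not a real policy schema.

```python
# Hypothetical role -> explicitly granted (action, resource) pairs. Anything else is denied.
POLICY = {
    "sensor-agent": {("write", "telemetry")},
    "operator": {("read", "telemetry"), ("restart", "workload")},
    "admin": {("read", "telemetry"), ("restart", "workload"), ("update", "firmware")},
}


def is_allowed(role: str, action: str, resource: str) -> bool:
    """Deny by default: allow only pairs explicitly granted to the role."""
    return (action, resource) in POLICY.get(role, set())


print(is_allowed("sensor-agent", "write", "telemetry"))  # True
print(is_allowed("sensor-agent", "read", "telemetry"))   # False: never granted
print(is_allowed("unknown-app", "read", "telemetry"))    # False: unknown roles get nothing
```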

Automate Monitoring

As with any system, edge computing systems flourish with continuous monitoring. Automated monitoring improves uptime, reduces the need for manual intervention, and makes proactive maintenance easier. To get the full benefits of monitoring and logging, you can:

Use centralized monitoring tools such as Prometheus for metrics collection and Grafana for dashboards

Set up alerts for CPU, memory, disk, and latency thresholds

Use self-healing scripts to restart failed services automatically

Keep testing environments up to date for validation before mass rollouts
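Threshold alerts are simple to express, but alerting on a single sample tends to fire on momentary spikes. The sketch below raises an alert only when a metric stays above its threshold for several consecutive samples. This is similar in spirit to the `for` duration in Prometheus alerting rules. The threshold values and metric names are hypothetical.

```python
# Hypothetical alert thresholds; tune them to each site's hardware and workloads.
THRESHOLDS = {"cpu_pct": 90.0, "mem_pct": 85.0, "disk_pct": 80.0, "latency_ms": 50.0}


def breached(sample: dict) -> set:
    """Metrics in a single sample that exceed their threshold."""
    return {m for m, limit in THRESHOLDS.items() if sample.get(m, 0.0) > limit}


def alerts(samples: list, consecutive: int = 3) -> set:
    """Metrics breached in each of the last `consecutive` samples (consecutive >= 1).

    Requiring several breaches in a row filters out one-off spikes.
    """
    recent = samples[-consecutive:]
    if len(recent) < consecutive:
        return set()  # not enough history yet
    return set.intersection(*(breached(s) for s in recent))


samples = [
    {"cpu_pct": 95.0, "mem_pct": 50.0},
    {"cpu_pct": 97.0, "mem_pct": 90.0},  # one-off memory spike
    {"cpu_pct": 93.0, "mem_pct": 40.0},
]
print(alerts(samples))  # {'cpu_pct'}
```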

Design for Scalability

Edge computing can quickly grow out of hand if the right precautions are not taken. Designing for scalability future-proofs the system for growth, whether that’s the number of devices, volume of data, or number of workloads. To keep your systems future-proof, make sure to:

Choose a modular infrastructure that can incorporate new devices without needing a major redesign

Select hardware with upgradeable storage and memory that can adapt to changing needs

Implement horizontal scaling at the edge with additional nodes

Handle workload distribution with cluster-based orchestration

Prepare for multi-site expansion by standardizing replication processes
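Cluster orchestrators handle workload distribution with schedulers that are far more sophisticated than this. Still, the core idea can be sketched as greedy placement: take the largest workloads first and put each on the node with the most free CPU. Node names, capacities, and CPU requests are hypothetical, and only CPU is considered.

```python
import heapq


def place(workloads: dict, nodes: dict) -> dict:
    """Greedy placement: largest workloads first, each onto the node with the most free CPU.

    workloads: name -> CPU request (cores); nodes: name -> CPU capacity (cores).
    Raises ValueError if a workload does not fit on any node.
    """
    # heapq is a min-heap, so free capacity is stored negated to pop the emptiest node.
    free = [(-cap, name) for name, cap in nodes.items()]
    heapq.heapify(free)
    assignment = {}
    for wl, cpu in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        neg_free, node = heapq.heappop(free)
        if -neg_free < cpu:  # the emptiest node is too small, so no node can fit it
            raise ValueError(f"{wl} ({cpu} cores) does not fit on any node")
        assignment[wl] = node
        heapq.heappush(free, (neg_free + cpu, node))
    return assignment


print(place({"inference": 3, "ingest": 2, "metrics": 1}, {"edge-1": 4, "edge-2": 4}))
# {'inference': 'edge-1', 'ingest': 'edge-2', 'metrics': 'edge-2'}
```

Adding capacity is then a matter of adding another entry to `nodes`, which is the horizontal scaling the list above describes.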

Enhance Edge Computing Environment Management with k0rdent

Managing an edge computing environment at scale requires tools that are lightweight, secure, and designed for highly distributed deployments. k0rdent is an open source Kubernetes management platform that makes it easy for enterprises to deploy, manage, and scale Kubernetes-based workloads across edge devices.

k0rdent is built on k0s, a minimal, self-contained Kubernetes distribution with zero dependencies that can run in very resource-constrained edge computing environments, such as ARMv7 industrial automation controllers.

k0rdent gives organizations centralized control over their entire edge infrastructure while upholding performance and security.

Key features include:

Centralized Management: Deploy, monitor, and update workloads from a single control plane.

Built-in Security: End-to-end encryption, zero-trust networking, and role-based access controls.

Hybrid Cloud Support: Seamless integration with cloud services for analytics and storage.

Scalable Deployment: Add new edge sites and nodes without complex configuration.

Book a demo today and see how Mirantis can help your team manage edge computing architecture.
